Building and viewing digital twins of robot arms over 5G

07/12/22 | 10 min read

This article provides a technical view of the solution we built to visualize a Stäubli TX2-60L, hereafter “the robot arm”, in all its glory remotely over 5G. To forewarn readers who missed the word “technical”: this article will be technical, but not deeply so, and will keep code to a minimum.

Goals

For our solution, we had to meet a few goals:

- Allow assessing the status of the robot arm, including temperatures, operating mode, safety status, errors, messages, and more – so problems can be diagnosed remotely.

- Allow following real-world physical movements of the robot arm in real time – so remote technicians can follow what the robot is doing (without streaming video from a camera!).

- Allow sending commands back to the robot arm – so remote technicians can ensure safety (lower movement velocity, lock movement, …) and help fix the problem.

- Facilitate remote support without requiring the robot arm to be reachable over the public internet – many industries, such as pharma, have tight security in place and prefer not to open their network to the public internet any further than necessary.

Now that our goals are clear, let’s look at the hardware setup we worked with for our initial version of the solution.

Components

Hardware setup

The hardware setup was composed of the following components:

- A TX2-60L ("the robot arm") and a matching CS9 controller, located at a laboratory.

- One or more HoloLens 2 devices, worn by remote technicians who provide support from outside the workplace.

- A private internal organisation network that connects the robot arm to the rest of the organisation and is not reachable from the public internet.

- An external public network, such as a home network, connecting the remote technician to the public internet.

Now that we've established what we were trying to accomplish and what we were working with, we can move on to how we designed the solution to reach these goals.

Solution design

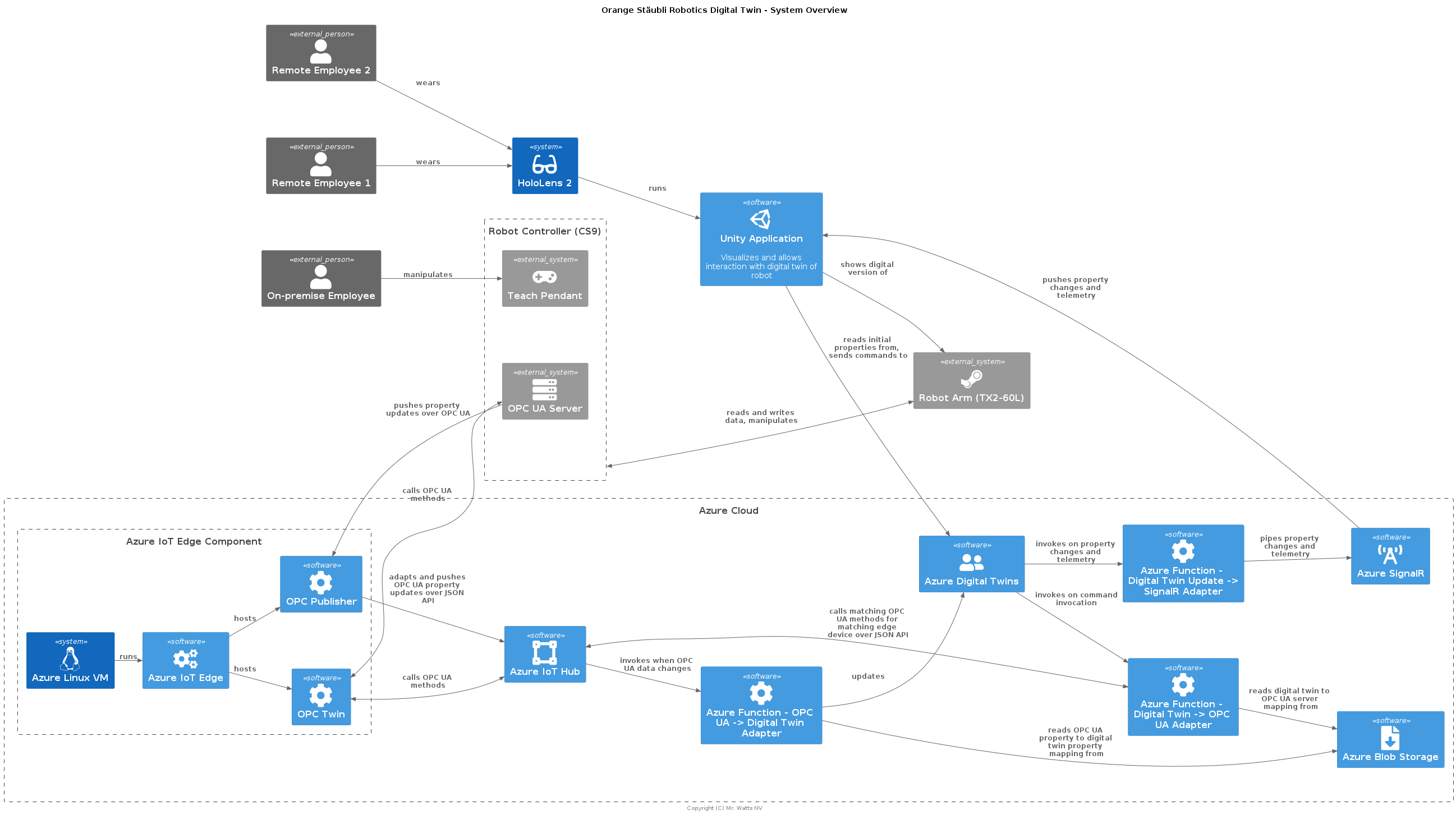

Before we break down the different components in the solution, let’s take a peek at the complete picture:

Let’s break it down.

TX2-60L, CS9, and OPC Unified Architecture (OPC UA)

The first problem that came to mind when designing the solution was: how are we going to extract information from the robot arm, such as its status? Can it even report this kind of information? Well, yes! OPC Unified Architecture (OPC UA for short) is an open standard supported by many types of sensors and industrial devices to facilitate exchange of information – in both directions – with other devices.

In our case, the TX2-60L was connected to a CS9 controller, which can act as an OPC UA server. OPC UA ticked all the boxes we needed:

- Facilitates sending property changes to interested parties.

- Facilitates processing and applying incoming change requests and commands.

- Supports authentication, encryption and signing, for (hopefully) obvious reasons.

- Can work reliably over standard network protocols (TCP/IP), benefitting from battle-tested software.
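To make the first bullet a bit more concrete: OPC UA clients typically subscribe to values and are only notified when a value changes, optionally ignoring changes smaller than a deadband. The snippet below is a minimal in-memory sketch of that idea and does not use a real OPC UA library. The class name `MonitoredValue`, the item name `joint1_temperature`, and the readings are all made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class MonitoredValue:
    """In-memory stand-in for a monitored value with an absolute deadband.

    This only imitates the "notify on change" behaviour; it is not an
    OPC UA implementation.
    """

    name: str
    deadband: float
    on_change: Callable[[str, float], None]
    _last_reported: Optional[float] = field(default=None, init=False)

    def sample(self, value: float) -> bool:
        """Report `value` if it moved more than `deadband` since the last report."""
        first_sample = self._last_reported is None
        if first_sample or abs(value - self._last_reported) > self.deadband:
            self._last_reported = value
            self.on_change(self.name, value)
            return True
        return False


# Collect notifications the way an interested party (subscriber) would.
received: List[Tuple[str, float]] = []
item = MonitoredValue(
    name="joint1_temperature",  # hypothetical property name
    deadband=0.5,
    on_change=lambda name, value: received.append((name, value)),
)

# Small fluctuations are swallowed; larger moves are pushed to the subscriber.
for reading in [40.0, 40.2, 40.4, 41.0, 41.1, 39.9]:
    item.sample(reading)

print(received)
```

Pushing only meaningful changes keeps traffic low, which matters once every joint position and temperature of the robot arm is being forwarded.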

That clears up how to get data out of the robot arm, but where would we send it to?

Azure Cloud

Large organisations often already have cloud infrastructure set up, configured to meet their stringent requirements. In our case we started by focussing on Azure, since it’s common in large organisations, and the HoloLens 2 fits well in the Microsoft ecosystem.

The use of Azure gives us access to several components:

- Azure IoT Hub – Central management hub for one or more (“edge” / IoT) devices, allowing things such as pushing updates, installing new containerized applications, and more.

- Azure VM – Virtual machine or computer.

- Azure Functions – Plainly put, bits of code or mini-applications that are triggered when some event happens and act on it.

- Azure Digital Twins – Imagine a document database or a spreadsheet with a list of properties and their values, each such list representing a digital copy of the same data on a physical device.

- Azure Blob Storage – Storage location for blobs (bits of data).

- Azure SignalR – Service that allows clients (smartphone apps, Unity applications, desktops, …) to connect to one another and exchange data in real time.

- Azure ExpressRoute – Allows connecting a private organisation network to Azure without going over the public internet. (This requires the help of a specific network provider, such as Orange.)

So, to answer the original question: Azure was where we’d send our data to. Now that the destination is clear, we can look at the Azure components and their function in detail.

Azure IoT Hub

Besides managing devices, the hub also supports forwarding events, such as telemetry and property updates (think of temperatures of the robot arm changing, its physical position updating, and more), to other parts of Azure.

Azure VM - IoT Edge Device / OPC Publisher / OPC Twin

Azure IoT Edge can be viewed as a software layer on top of containers (usually Docker) that manages those containers based on configurations pushed from Azure, so you can easily configure them remotely. As such, we used it to manage the OPC Publisher and OPC Twin.

The OPC Publisher allows subscribing to updates from OPC UA servers (such as the CS9 controller of our robot arm) and sending them to an Azure IoT Hub. This is the first part in the stack that ingests data from the robot arm.

In turn, the OPC Twin is a related component that we mainly used to go the other way and send commands back to the robot arm.

Azure Digital Twins

A core strength of digital twins is having a complete digital overview of the status of your power plant, factory, laboratory, or fleet of drones, to give a couple of examples.

In our case we created a digital twin for both the TX2-60L and its CS9 controller, mapping all the properties we needed (temperatures, joint positions, and more).
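Azure Digital Twins describes twins with models written in DTDL, a JSON-based modelling language. The snippet below is a rough sketch of what a model for the robot arm could look like. It is not our actual model: the model ids (`dtmi:example:...`), the property names, the `controlledBy` relationship, and the assumption of one position and one temperature per joint on a six-axis arm are all illustrative.

```python
import json


def build_robot_arm_model(joint_count: int = 6) -> dict:
    """Build a DTDL v2 interface for a robot arm (all names are hypothetical)."""
    contents = [
        {"@type": "Property", "name": "operatingMode", "schema": "string"},
        {"@type": "Property", "name": "safetyStatus", "schema": "string"},
    ]

    # One position and one temperature property per joint. Separate
    # properties are used here instead of a single array-valued property.
    for joint in range(1, joint_count + 1):
        contents.append(
            {"@type": "Property", "name": f"joint{joint}Position", "schema": "double"}
        )
        contents.append(
            {"@type": "Property", "name": f"joint{joint}Temperature", "schema": "double"}
        )

    # Link the arm to the twin of its controller.
    contents.append(
        {
            "@type": "Relationship",
            "name": "controlledBy",
            "target": "dtmi:example:robots:Cs9Controller;1",  # hypothetical id
        }
    )

    return {
        "@context": "dtmi:dtdl:context;2",
        "@id": "dtmi:example:robots:Tx2_60l;1",  # hypothetical id
        "@type": "Interface",
        "displayName": "TX2-60L robot arm",
        "contents": contents,
    }


model = build_robot_arm_model()
print(json.dumps(model, indent=2))
```

Each twin created from such a model then holds the current values of these properties, which is the "spreadsheet of properties" view described earlier.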

Azure Blob Storage

Azure Blob Storage is a storage location for “blobs” or bits of data. Our use of it remained limited to storing some configuration files our Azure Functions needed to operate.

Azure SignalR

From the options available to us (Service Bus, Event Grid, …), we chose SignalR because it targets wide platform support and low latency, and seemed the best fit for getting events to the HoloLens 2 as quickly as possible.

Azure Functions

Azure Functions are bits of code that execute a task in response to some sort of event. We had a couple of them:

- OPC UA → Digital Twin Adapter — Called when robot arm (OPC UA) data comes in through the IoT Hub. Updates the digital twin of the robot accordingly (since both use different formats).

- Digital Twin → OPC UA Adapter — Called when someone using the HoloLens 2 executes a command on a digital twin. Converts this request to an actual method invocation on the IoT Hub, in turn sending it to the OPC Twin, which maps it to the appropriate OPC UA method call.

- Digital Twin Update → SignalR Adapter — Called when the digital twin is updated. Sends information on these updates over SignalR to the HoloLens 2 (clients).
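To give a feel for what the first adapter does, here is a minimal sketch of its core translation step: turning a batch of OPC UA samples into a JSON Patch document, which is the format Azure Digital Twins accepts for twin updates. It is not our production code. The node ids and twin property paths in `NODE_TO_TWIN_PATH` are hypothetical, and the incoming message shape (`NodeId` plus a nested `Value.Value`) is an assumption about how the publisher is configured, so check it against your own telemetry.

```python
import json
from typing import Dict, List

# Hypothetical mapping from OPC UA node ids to digital twin property paths.
NODE_TO_TWIN_PATH: Dict[str, str] = {
    "ns=2;s=Robot.Joint1.Position": "/joint1Position",
    "ns=2;s=Robot.Joint1.Temperature": "/joint1Temperature",
    "ns=2;s=Robot.OperatingMode": "/operatingMode",
}


def telemetry_to_patch(message_body: str) -> List[dict]:
    """Convert a JSON telemetry message into JSON Patch operations.

    Assumed input: one sample object or a list of them, each shaped like
    {"NodeId": "...", "Value": {"Value": <new value>}}.
    """
    samples = json.loads(message_body)
    if isinstance(samples, dict):
        samples = [samples]

    # Keep only the newest value per property; unknown nodes are ignored.
    latest: Dict[str, object] = {}
    for sample in samples:
        path = NODE_TO_TWIN_PATH.get(sample.get("NodeId"))
        if path is None:
            continue
        latest[path] = sample["Value"]["Value"]

    # In JSON Patch (RFC 6902), "add" on an existing object member replaces
    # its value, so it covers both first-time and repeated updates.
    return [{"op": "add", "path": path, "value": value} for path, value in latest.items()]


example_body = json.dumps(
    [
        {"NodeId": "ns=2;s=Robot.Joint1.Position", "Value": {"Value": 10.0}},
        {"NodeId": "ns=2;s=Robot.Joint1.Position", "Value": {"Value": 12.5}},
        {"NodeId": "ns=2;s=Robot.OperatingMode", "Value": {"Value": "manual"}},
    ]
)
print(telemetry_to_patch(example_body))
```

In a real Azure Function the resulting patch would be handed to the Digital Twins client; that call is left out here so the sketch stays runnable without Azure credentials.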

Azure ExpressRoute

As the robot arm was not reachable over the public internet, we set up an Azure ExpressRoute together with Orange, which funnels data over a private tunnel between Azure and a private network. This tunnel connected the private network of the robot arm to Azure and ran over Orange’s 5G network (more on that below).

Data Flow Summary

From the robot arm to the HoloLens 2:

- TX2-60L (robot arm) changes in some way (temperature goes up, it moves, …).

- CS9, acting as OPC UA server, sees this and pushes update to OPC Publisher (IoT Edge Device in Azure VM).

- OPC Publisher pushes update to IoT Hub.

- IoT Hub pushes update to Azure Function (OPC UA → Digital Twin Adapter).

- Azure Function applies update to digital twin.

- Azure Function (Digital Twin Update → SignalR Adapter) gets triggered and pushes data over SignalR.

- HoloLens 2 receives update and updates application state accordingly (updating visible temperatures, moving digital robot, …).
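The last two steps of this flow can be sketched as follows: a twin change is turned into a SignalR message, and the client merges it into its local state. This is a simplified stand-in, not our HoloLens application. The `target`/`arguments` shape follows what a SignalR message generally looks like when sent from an Azure Function, while the target name `twinUpdated`, the twin id, and the `RobotArmView` class are made up for illustration.

```python
from typing import Dict, List


def twin_change_to_signalr_message(twin_id: str, patch: List[dict]) -> dict:
    """Turn a twin's JSON Patch change list into a SignalR-style message."""
    changes = {
        operation["path"].lstrip("/"): operation.get("value")
        for operation in patch
        if operation.get("op") in ("add", "replace")  # removals are skipped here
    }
    return {
        "target": "twinUpdated",  # hypothetical client-side handler name
        "arguments": [{"twinId": twin_id, "changes": changes}],
    }


class RobotArmView:
    """Stand-in for client-side state; a real client would also redraw the 3D model."""

    def __init__(self) -> None:
        self.state: Dict[str, Dict[str, object]] = {}

    def on_twin_updated(self, payload: dict) -> None:
        # Merge the changed properties into what we already know about the twin.
        self.state.setdefault(payload["twinId"], {}).update(payload["changes"])


message = twin_change_to_signalr_message(
    "tx2-60l-lab",  # hypothetical twin id
    [{"op": "replace", "path": "/joint1Temperature", "value": 41.5}],
)
view = RobotArmView()
view.on_twin_updated(message["arguments"][0])
print(view.state)
```

Sending only the changed properties, rather than the whole twin, keeps each message small, which helps when joint positions update many times per second.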

From the HoloLens 2 back to the robot arm:

- HoloLens 2 sends command to digital twin.

- Azure Function (Digital Twin → OPC UA Adapter) is triggered and sends command to the IoT Hub for the appropriate device.

- IoT Hub sends command to the OPC Twin (IoT Edge Device in Azure VM).

- OPC Twin sends OPC UA method invocation to CS9.

- CS9 performs command and/or manipulates TX2-60L (robot arm).
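Because this direction ends in a physical robot moving (or not moving), the adapter in the middle is a natural place to validate commands before they travel any further. The sketch below shows one way to do that with an allow-list. The command names, OPC UA method node ids, argument rules, and the shape of the resulting payload are all hypothetical and do not reflect the actual interface of the CS9 or the OPC Twin.

```python
from dataclasses import dataclass
from typing import Callable, Dict


def _is_percentage(arguments: list) -> bool:
    """Accept exactly one numeric argument between 0 and 100 (booleans excluded)."""
    if len(arguments) != 1:
        return False
    value = arguments[0]
    if isinstance(value, bool) or not isinstance(value, (int, float)):
        return False
    return 0 <= value <= 100


@dataclass(frozen=True)
class CommandSpec:
    method_node_id: str
    validate: Callable[[list], bool]


# Allow-list of commands a remote technician may send (names are made up).
COMMANDS: Dict[str, CommandSpec] = {
    "setVelocityLimit": CommandSpec("ns=2;s=Robot.SetVelocityLimit", _is_percentage),
    "lockMovement": CommandSpec("ns=2;s=Robot.LockMovement", lambda args: len(args) == 0),
}


def build_method_call(command: str, arguments: list) -> dict:
    """Map a twin command to a method-call payload, rejecting anything unexpected."""
    spec = COMMANDS.get(command)
    if spec is None:
        raise ValueError(f"unknown command: {command}")
    if not spec.validate(arguments):
        raise ValueError(f"invalid arguments for {command}: {arguments!r}")
    return {"methodId": spec.method_node_id, "arguments": list(arguments)}


print(build_method_call("setVelocityLimit", [25]))
print(build_method_call("lockMovement", []))
```

Rejecting unknown commands and out-of-range arguments early means a typo or a buggy client never reaches the controller.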

Real-time? Low Latency? Private Network? 5G.

As the private tunnel of the ExpressRoute needs to be provisioned by a provider, this was also the time for Orange to let 5G shine: it ticked all the boxes by allowing a fully private network, offering the necessary low latency, and providing enough bandwidth.

Summary

And voilà, real-time updates in a secure fashion over 5G, in two directions! The neat thing about this solution is that:

- It works with any device that supports communicating using OPC UA – think of drones, sensors, CNC machines, and more.

- It works with any client that can communicate over SignalR, which is widely available for many programming languages – you can just as easily build a mobile app to view the same data or build dashboards summarizing the status of all digital twins.

- It scales to as many devices and digital twins as you want in your organisation – want to visualize your entire production line digitally? (We do! So, contact us if you do, too 😉!)

Shout out to Orange and Stäubli for helping us build this awesome solution!